GPUStack is an open-source GPU cluster manager designed for running large models, including LLMs, embedding models, reranker models, vision language models, image generation models, as well as STT and TTS models. It allows you to create a unified cluster by combining GPUs from diverse platforms, such as Apple Macs, Windows PCs, and Linux servers.

Using Docker to install GPUStack on Linux is the recommended method. It simplifies the installation process by avoiding many dependency and compatibility issues.

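Today, we bring you a tutorial on how to set up the NVIDIA container runtime and deploy GPUStack with Docker. The commands assume an Ubuntu system (the cuda-keyring URL used below targets Ubuntu 22.04 on x86_64).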

Requirements

Verify that you have an NVIDIA GPU:

    lspci | grep -i nvidia

Verify that the system has gcc installed:

    gcc --version
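The two checks above can be folded into a small preflight helper. The following is a minimal sketch, not part of GPUStack or the NVIDIA tooling; the function names `have_cmd` and `preflight` are hypothetical. It only reports whether each named tool is on the PATH, so it does not replace the `lspci` check for the GPU itself.

```shell
#!/bin/sh
# Preflight sketch: report whether the tools this tutorial relies on are present.
# have_cmd and preflight are hypothetical helper names chosen for illustration.

have_cmd() {
    # Succeeds if the named command can be found on PATH.
    command -v "$1" >/dev/null 2>&1
}

preflight() {
    # Print one status line per tool; return non-zero if any tool is missing.
    missing=0
    for tool in "$@"; do
        if have_cmd "$tool"; then
            echo "ok:      $tool"
        else
            echo "missing: $tool"
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `preflight lspci gcc wget curl` would list which of the tools used later in this tutorial are already installed.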

Installing NVIDIA driver

Refer to: https://developer.nvidia.com/datacenter-driver-downloads

Install the kernel headers and development packages for the currently running kernel:

    sudo apt-get install linux-headers-$(uname -r)

Install the cuda-keyring package:

    wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2204/x86_64/cuda-keyring_1.1-1_all.deb
    sudo dpkg -i cuda-keyring_1.1-1_all.deb

Install the NVIDIA driver:

    sudo apt-get update
    sudo apt-get install nvidia-open -y

Reboot the system:

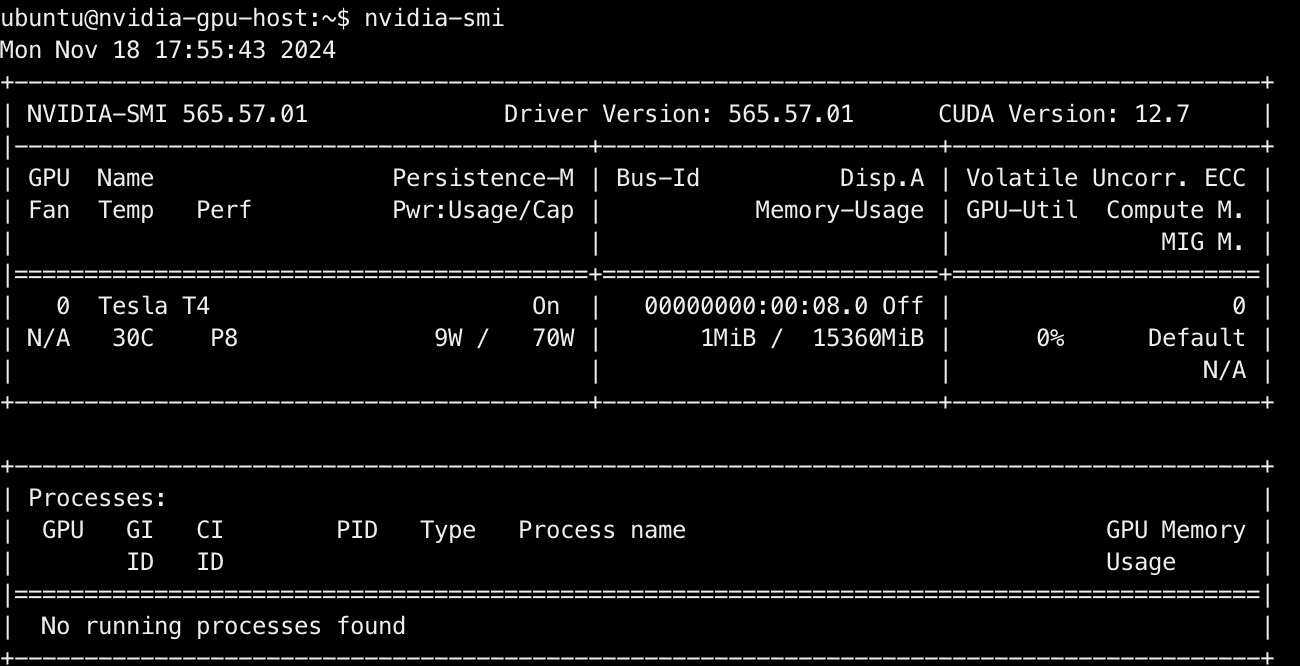

    sudo reboot

Log in again and check that the nvidia-smi command is available:

    nvidia-smi
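If you want a compact, script-friendly view instead of the full nvidia-smi table, the query mode can help. The sketch below wraps it in a hypothetical `gpu_summary` function; the `NVIDIA_SMI` override variable is an assumption added here so the binary path can be changed, and it is not something the driver defines.

```shell
#!/bin/sh
# Sketch: print one CSV line per GPU (index, name, driver version, total memory).
# NVIDIA_SMI is a hypothetical override for the binary name or path.
NVIDIA_SMI="${NVIDIA_SMI:-nvidia-smi}"

gpu_summary() {
    # Fail early with a hint if the driver utilities are not installed.
    if ! command -v "$NVIDIA_SMI" >/dev/null 2>&1; then
        echo "nvidia-smi not found; the driver may not be installed" >&2
        return 1
    fi
    "$NVIDIA_SMI" --query-gpu=index,name,driver_version,memory.total \
        --format=csv,noheader
}
```

Calling `gpu_summary` after the reboot should print one line per detected GPU, or return a non-zero status if the driver utilities are missing.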

Installing Docker Engine

Refer to: https://docs.docker.com/engine/install/ubuntu/

Run the following command to uninstall all conflicting packages:

    for pkg in docker.io docker-doc docker-compose docker-compose-v2 podman-docker containerd runc; do sudo apt-get remove $pkg; done

Set up Docker's apt repository:

    # Add Docker's official GPG key:
    sudo apt-get update
    sudo apt-get install ca-certificates curl
    sudo install -m 0755 -d /etc/apt/keyrings
    sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc
    sudo chmod a+r /etc/apt/keyrings/docker.asc

    # Add the repository to Apt sources:
    echo \
      "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu \
      $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | \
      sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
    sudo apt-get update

Install the Docker packages:

    sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin -y

Check that Docker is available:

    docker info
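Some commands in this tutorial call `docker` directly and others use `sudo docker`. On a default install, a non-root user typically needs `sudo` unless they belong to the `docker` group. The sketch below encodes that rule in a hypothetical `docker_needs_sudo` helper; it only inspects the user id and group names and does not contact the Docker daemon.

```shell
#!/bin/sh
# Sketch: decide whether docker commands should be prefixed with sudo.
# docker_needs_sudo is a hypothetical helper name used for illustration.

docker_needs_sudo() {
    # $1: numeric user id, $2: space-separated group names.
    # Returns 0 (true) when sudo is needed, 1 otherwise.
    uid="$1"
    group_list="$2"
    if [ "$uid" -eq 0 ]; then
        return 1    # root can talk to the Docker daemon directly
    fi
    for g in $group_list; do
        if [ "$g" = "docker" ]; then
            return 1    # members of the docker group do not need sudo
        fi
    done
    return 0
}

# Pick a command prefix for the current user.
if docker_needs_sudo "$(id -u)" "$(id -nG)"; then
    DOCKER="sudo docker"
else
    DOCKER="docker"
fi
echo "Using: $DOCKER"
```

With this in place, `$DOCKER info` runs the availability check with or without `sudo` as appropriate.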

Installing NVIDIA Container Toolkit

Refer to: https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/install-guide.html

Configure the production repository:

    curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \
      && curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \
        sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \
        sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

Install the NVIDIA Container Toolkit packages:

    sudo apt-get update
    sudo apt-get install -y nvidia-container-toolkit

Configure the container runtime by using the nvidia-ctk command:

    sudo nvidia-ctk runtime configure --runtime=docker

Check the daemon.json file:

    cat /etc/docker/daemon.json

Restart the Docker daemon:

    sudo systemctl restart docker

Verify your installation by running a sample CUDA container:

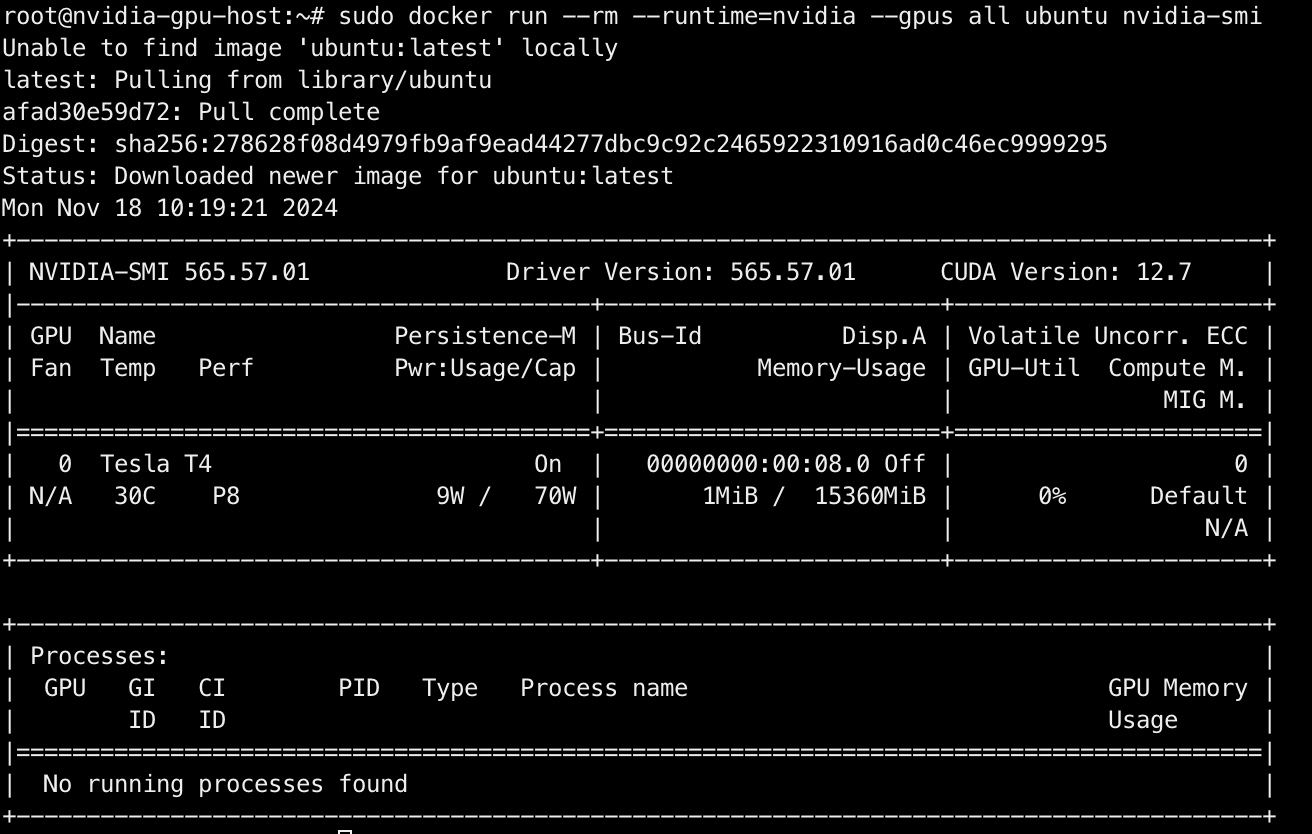

    sudo docker run --rm --runtime=nvidia --gpus all ubuntu nvidia-smi
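If the sample container fails to start, one thing worth checking is whether the daemon.json file inspected earlier actually references the NVIDIA runtime. The sketch below assumes that `nvidia-ctk runtime configure` writes a runtime entry whose path mentions `nvidia-container-runtime`; the function name `has_nvidia_runtime` is hypothetical, and the check is a plain text search rather than a JSON parse.

```shell
#!/bin/sh
# Sketch: check that a Docker daemon.json mentions the NVIDIA container runtime.
# Assumption: the configured runtime path contains "nvidia-container-runtime".

has_nvidia_runtime() {
    # $1: path to daemon.json (defaults to Docker's usual location).
    # Returns 0 if found, 1 if not found, 2 if the file is unreadable.
    file="${1:-/etc/docker/daemon.json}"
    if [ ! -r "$file" ]; then
        return 2
    fi
    grep -q 'nvidia-container-runtime' "$file"
}
```

Running `has_nvidia_runtime && echo configured` gives a quick yes or no before you restart the Docker daemon and retry the container.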

Installing GPUStack

Refer to: https://docs.gpustack.ai/latest/installation/docker-installation/

Install GPUStack with Docker:

    docker run -d --gpus all -p 80:80 --ipc=host --name gpustack \
        -v gpustack-data:/var/lib/gpustack gpustack/gpustack

To view the login password, run the following command:

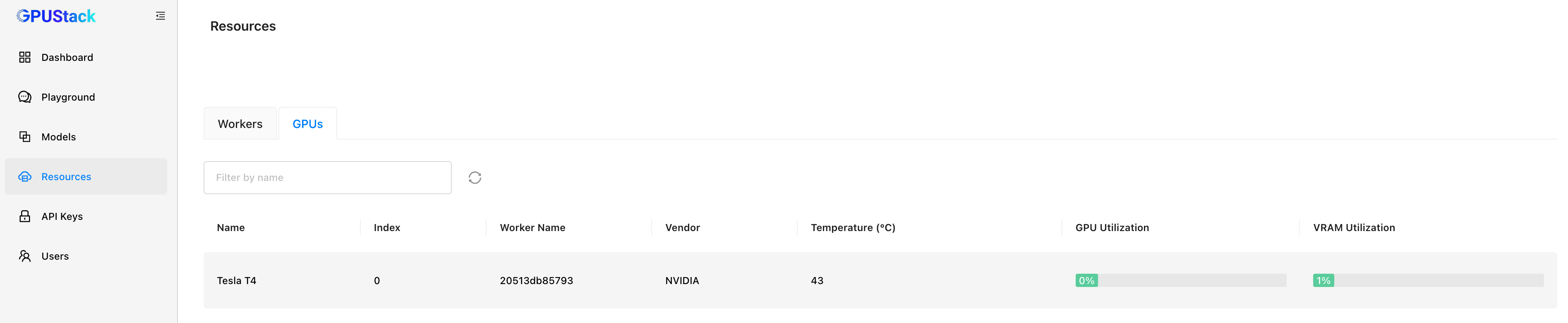

    docker exec -it gpustack cat /var/lib/gpustack/initial_admin_password

Access the GPUStack UI (http://YOUR_HOST_IP) in your browser, using admin as the username and the password obtained above. After resetting your password, log in to GPUStack.
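When scripting the password step, the command may fail if it runs before the container has finished starting. That timing is an assumption, not something the GPUStack documentation cited here states. A generic retry loop is one way to handle it; `retry` below is a hypothetical helper, not a Docker or GPUStack command.

```shell
#!/bin/sh
# Sketch: run a command until it succeeds or the attempt limit is reached.
# Usage: retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]

retry() {
    attempts="$1"
    delay="$2"
    shift 2
    n=1
    while :; do
        # Stop as soon as the command succeeds.
        if "$@"; then
            return 0
        fi
        # Give up once the attempt limit is reached.
        if [ "$n" -ge "$attempts" ]; then
            return 1
        fi
        n=$((n + 1))
        sleep "$delay"
    done
}
```

For example, `retry 30 2 docker exec gpustack cat /var/lib/gpustack/initial_admin_password` would try for about a minute. The `-it` flags are omitted in that call because a script has no interactive terminal.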

To add worker nodes and form a GPUStack cluster, run the following command on each worker node:

    docker run -d --gpus all --ipc=host --network=host --name gpustack \
        gpustack/gpustack --server-url http://YOUR_HOST_IP --token YOUR_TOKEN

Replace http://YOUR_HOST_IP with your GPUStack server URL and YOUR_TOKEN with your secret token for adding workers. To retrieve the token from the GPUStack server, use the following command:

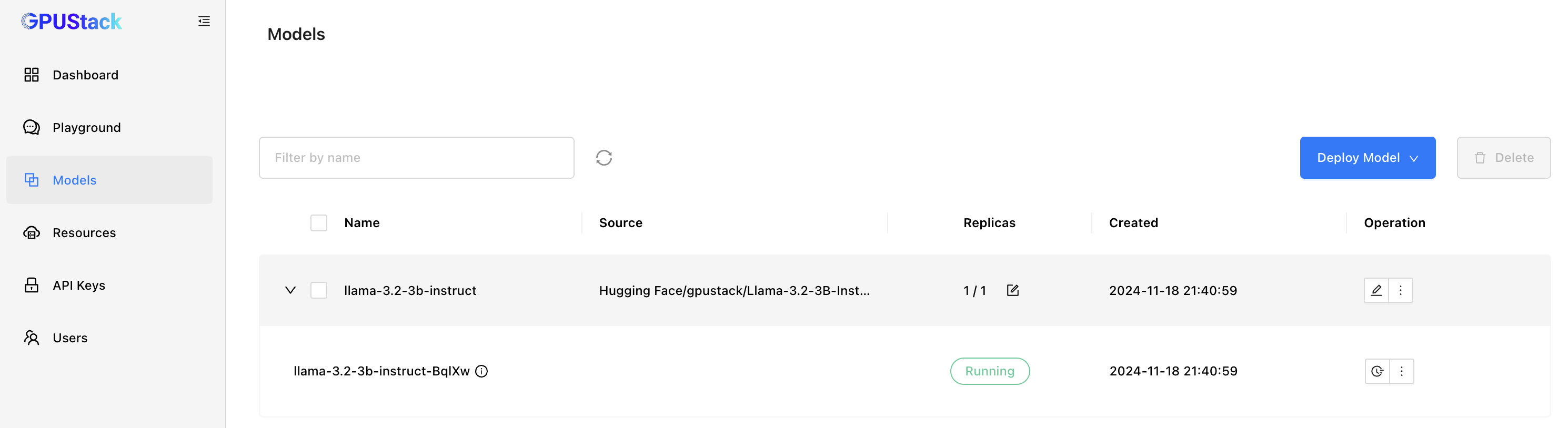

    docker exec -it gpustack cat /var/lib/gpustack/token

After that, deploy models from Hugging Face:

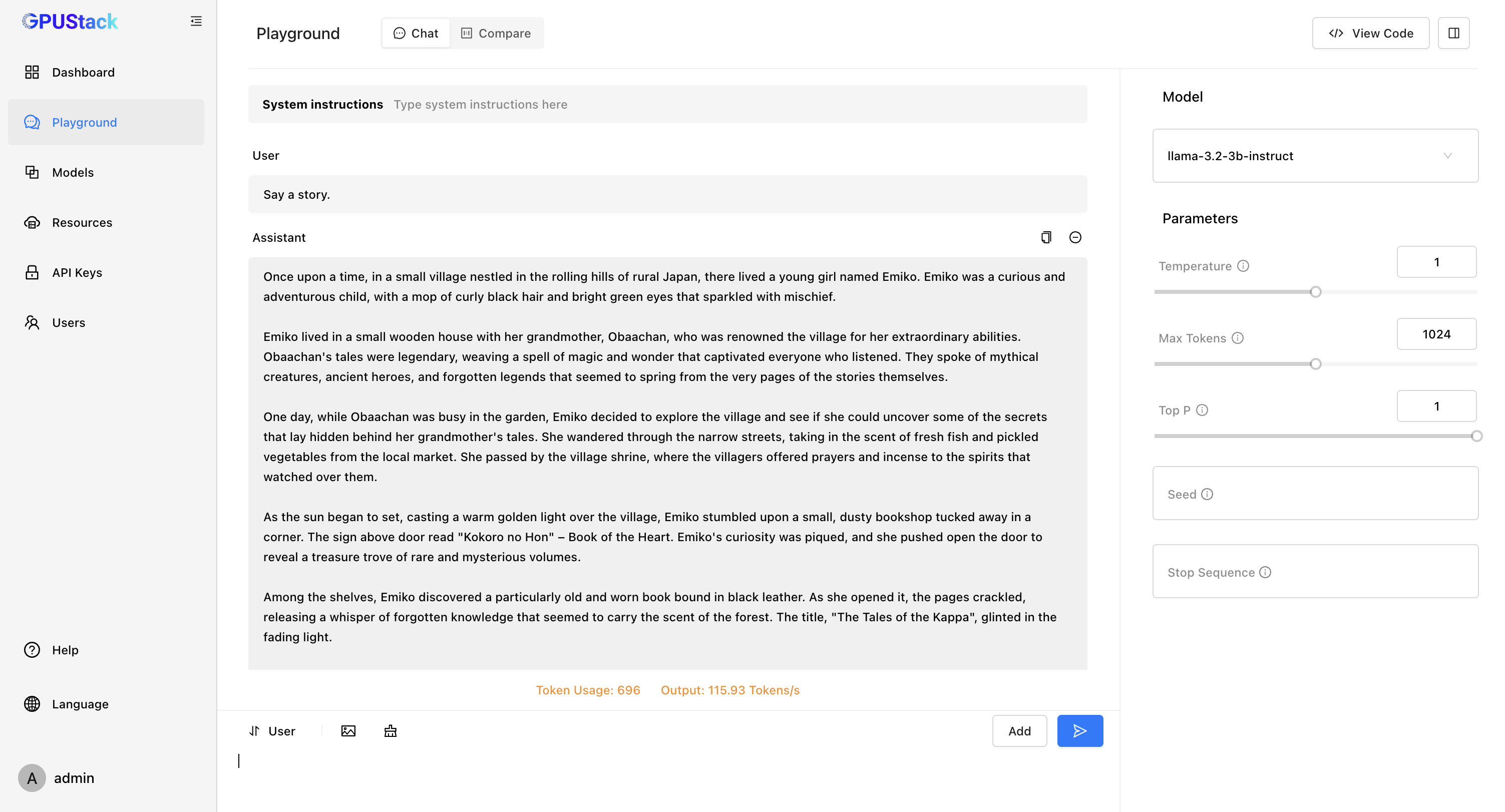

Then experiment with the model in the Playground.
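Returning to the worker step above: when several worker nodes need to join, it can help to generate the join command from two values instead of editing placeholders by hand. The sketch below uses a hypothetical `worker_run_cmd` function that validates its inputs and prints the same `docker run` line shown earlier in this tutorial; it prints the command and does not run it.

```shell
#!/bin/sh
# Sketch: build the worker join command from a server URL and a token.
# worker_run_cmd is a hypothetical helper; it prints the command only.

worker_run_cmd() {
    # $1: GPUStack server URL, $2: worker token
    server_url="$1"
    token="$2"
    case "$server_url" in
        http://*|https://*) ;;
        *)
            echo "server URL must start with http:// or https://" >&2
            return 1
            ;;
    esac
    if [ -z "$token" ]; then
        echo "token must not be empty" >&2
        return 1
    fi
    printf 'docker run -d --gpus all --ipc=host --network=host --name gpustack gpustack/gpustack --server-url %s --token %s\n' \
        "$server_url" "$token"
}
```

You could review the output of `worker_run_cmd http://YOUR_HOST_IP YOUR_TOKEN` and then paste it on each worker node.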

Join Our Community

In this tutorial, we showed how to set up the NVIDIA container runtime and deploy GPUStack with Docker.

If you are interested in GPUStack, please find more information at: https://gpustack.ai.

If you encounter any issues or have suggestions for GPUStack, feel free to join our Community for support from the GPUStack team and to connect with fellow users globally.