Optimized AI Inference on Your GPU

GPUStack unlocks the full potential of your hardware with intelligent optimization for LLM deployment and inference.

The Challenge of LLM Deployment

Open-source inference engines deliver state-of-the-art performance, but unlocking it takes significant expertise and tuning effort. GPUStack removes that complexity.

Without Optimization

Manual tuning, complex configurations, and suboptimal performance across different hardware platforms.

With GPUStack

Automated optimization that delivers significant performance gains out of the box.

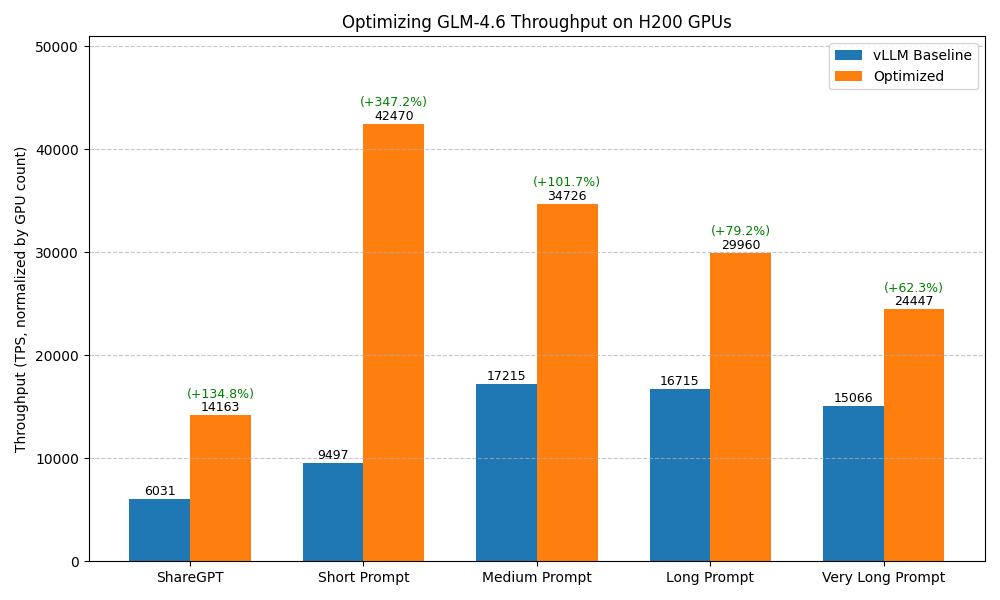

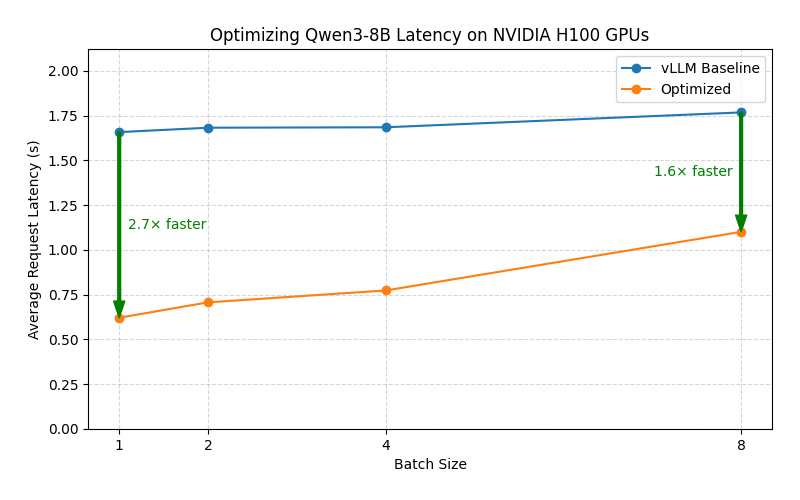

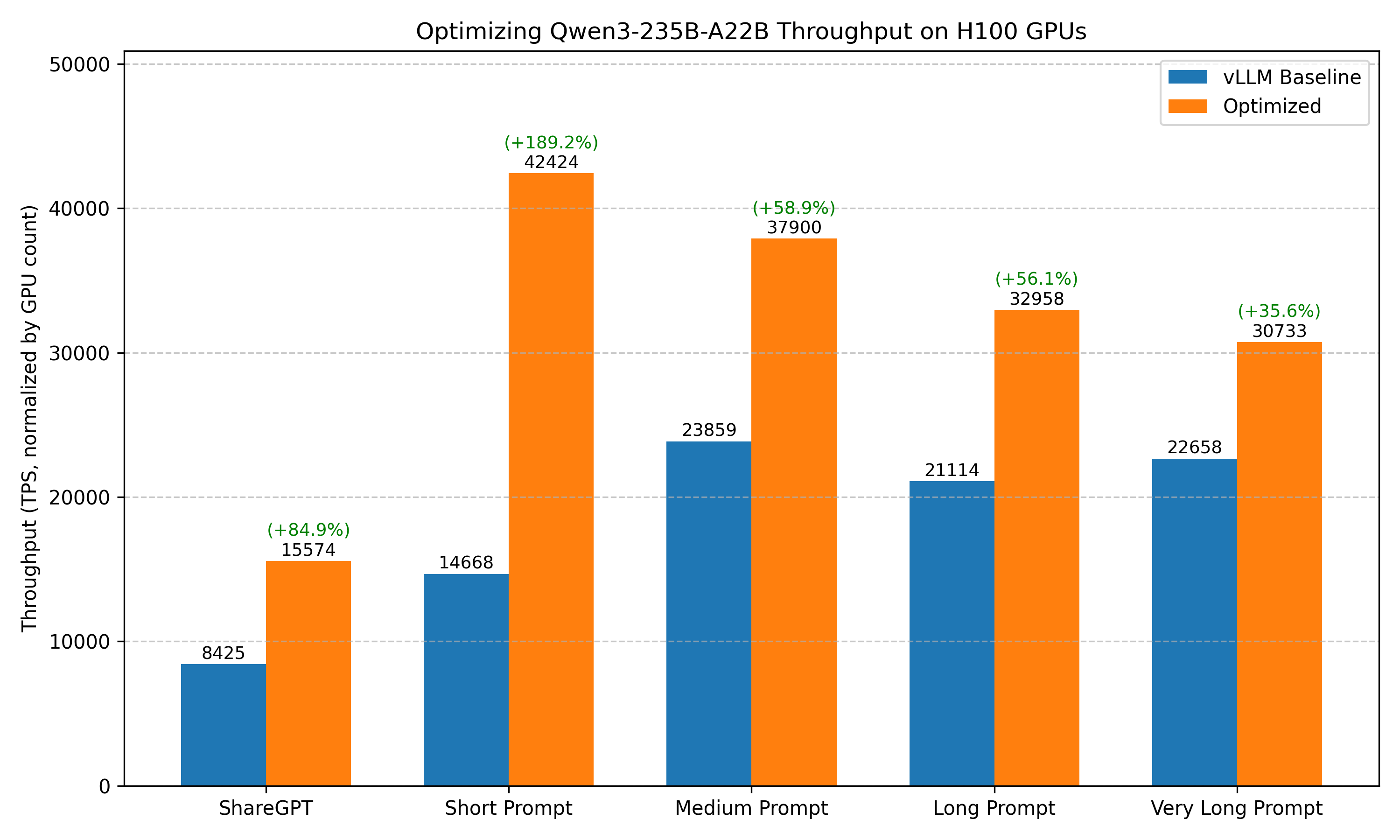

Performance Benchmarks

A GPUStack-tuned deployment achieves significant performance gains over an unoptimized vLLM baseline.

Tailored for Every Use Case

Different scenarios demand different optimization strategies. GPUStack provides flexible deployment modes.

Throughput Mode

Optimized for high throughput under high request concurrency. Perfect for batch processing and high-volume APIs.

Latency Mode

Optimized for low latency under low request concurrency. Ideal for real-time interactive applications.

Standard Mode

Runs at full precision and prioritizes compatibility. Ensures maximum model accuracy and stability.

Custom Mode

Fully customizable optimization parameters tailored to your specific requirements and constraints.
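As a rough illustration of how the four modes map to workloads, here is a minimal sketch in Python. Only the mode names come from this page. The `Workload` type, the `choose_mode` helper, and the concurrency threshold of 32 are hypothetical and are not part of any GPUStack API.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of a deployment's traffic and constraints."""
    concurrent_requests: int     # typical number of in-flight requests
    needs_full_precision: bool   # accuracy and compatibility come first
    has_custom_parameters: bool  # the team supplies its own tuning parameters


def choose_mode(workload: Workload, high_concurrency_threshold: int = 32) -> str:
    """Return a mode name using a simple rule of thumb.

    The threshold is an assumed value for illustration only; the right
    cut-off depends on the model, the hardware, and the latency target.
    """
    if workload.has_custom_parameters:
        return "custom"
    if workload.needs_full_precision:
        return "standard"
    if workload.concurrent_requests >= high_concurrency_threshold:
        return "throughput"
    return "latency"


if __name__ == "__main__":
    batch_api = Workload(concurrent_requests=128,
                         needs_full_precision=False,
                         has_custom_parameters=False)
    chat_ui = Workload(concurrent_requests=4,
                       needs_full_precision=False,
                       has_custom_parameters=False)
    print(choose_mode(batch_api))
    print(choose_mode(chat_ui))
```

In this sketch, custom parameters and full-precision requirements take priority, and concurrency decides between throughput and latency only when neither applies.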

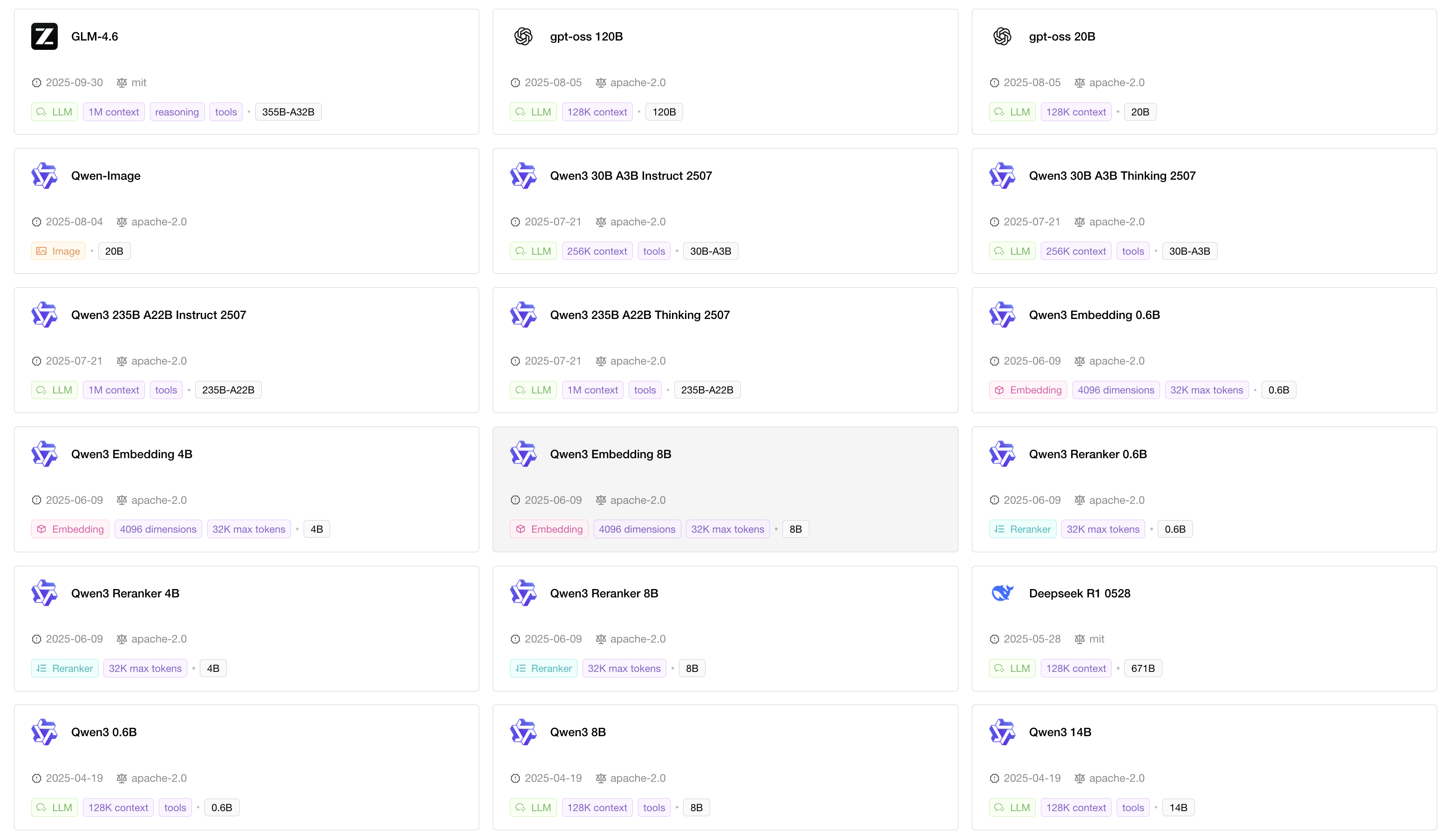

Fast Innovation with AI

Pluggable backend and engine support lets you run state-of-the-art open-source models from day one.

DeepSeek

Qwen

GLM

Kimi

Llama

Mistral

Gemma

Phi

Scalable GPU Clusters

Deploy across any infrastructure, anywhere in the world.

On-Premise

Leverage your existing GPU servers with full control over your infrastructure.

Kubernetes

Deploy on any Kubernetes cluster with seamless orchestration and management.

Multi-Cloud

Dynamically scale GPU resources across AWS, DigitalOcean, Alibaba Cloud, and more.

Monitor, Measure, Optimize

GPUStack gives you the power to see everything your models do. From real-time performance metrics to historical trends, track every inference, every millisecond, and every resource your LLMs consume.

Real-time Metrics

Live performance tracking

Historical Trends

Performance analytics

Resource Usage

GPU/CPU utilization

Optimization

Automated tuning
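To make the metrics above concrete, the sketch below shows one way to summarize a window of per-request latencies into throughput and latency percentiles. It is a generic illustration using Python's standard library, not GPUStack's implementation. The `summarize` function and its field names are hypothetical.

```python
import statistics


def summarize(latencies_ms, window_seconds):
    """Summarize request latencies (in milliseconds) observed in one time window.

    Assumes every request in `latencies_ms` completed within `window_seconds`.
    """
    if len(latencies_ms) < 2:
        raise ValueError("need at least two latency samples")
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")

    # 99 cut points split the data into 100 groups; index 94 is the 95th percentile.
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {
        "requests": len(latencies_ms),
        "throughput_rps": len(latencies_ms) / window_seconds,
        "p50_ms": statistics.median(latencies_ms),
        "p95_ms": cuts[94],
        "max_ms": max(latencies_ms),
    }


if __name__ == "__main__":
    samples = [100.0, 200.0, 300.0, 400.0]
    print(summarize(samples, window_seconds=2.0))
```

Comparing these summaries across windows is what turns real-time metrics into historical trends: a rising p95 at flat throughput, for example, usually points to a resource bottleneck rather than more traffic.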

Management & Collaboration

GPUStack enables teams to efficiently manage models, inference engines, and compute resources while collaborating seamlessly.

Team Management

Unified Management Interface

From GPU clusters to users and API keys, keep everything organized and under control.

Enterprise Ready

GPUStack provides the enterprise capabilities needed to deploy LLMs at scale with confidence.

SSO Integration

Seamless enterprise login and identity management

Access Control

Manage who can access or modify models within your team

Token Quotas

Control usage organization-wide with rate limits

High Availability

Ensure uninterrupted model service with failover

Ready to Optimize Your AI Inference?

Join thousands of developers and enterprises already using GPUStack to deploy LLMs at scale.